Douglas K. G. Araujo

This notebook showcases one possible use of gingado by estimating economic growth across countries, using the dataset studied by Barro and Lee (1994). You can run this notebook interactively, by clicking on the appropriate link above.

This dataset has been widely studied in economics. Belloni, Chernozhukov, and Hansen (2011) and Giannone, Lenza, and Primiceri (2021) are two studies of this dataset that are most related to machine learning.

This notebook will use gingado to: quickly set up a well-performing machine learning model and use its results as evidence to support the conditional convergence hypothesis; compare different classes of models (and their combination in a single model); and use and document the best-performing alternative.

Because the notebook is for pedagogical purposes only, please bear in mind some aspects of the machine learning workflow (such as carefully thinking about the cross-validation strategy) are glossed over in this notebook. Also, only the key academic references are cited; more references can be found in the papers mentioned in this example.

For a more thorough description of gingado, please refer to the package’s website and to the academic material about it.

We will import packages as the work progresses. This will help highlight the specific steps in the workflow that gingado can be helpful with.

The data is available in the online annex to Giannone, Lenza, and Primiceri (2021). In that paper, this dataset corresponds to what the authors call “macro2”. The original data, along with more information on the variables, can be found in this NBER website. A very helpful codebook is found in this repo.

The dataset contains explanatory variables used to model per-capita growth between 1960 and 1985, for 90 countries.

Index(['Unnamed: 0', 'gdpsh465', 'bmp1l', 'freeop', 'freetar', 'h65', 'hm65',
       'hf65', 'p65', 'pm65', 'pf65', 's65', 'sm65', 'sf65', 'fert65',
       'mort65', 'lifee065', 'gpop1', 'fert1', 'mort1', 'invsh41', 'geetot1',
       'geerec1', 'gde1', 'govwb1', 'govsh41', 'gvxdxe41', 'high65', 'highm65',
       'highf65', 'highc65', 'highcm65', 'highcf65', 'human65', 'humanm65',
       'humanf65', 'hyr65', 'hyrm65', 'hyrf65', 'no65', 'nom65', 'nof65',
       'pinstab1', 'pop65', 'worker65', 'pop1565', 'pop6565', 'sec65',
       'secm65', 'secf65', 'secc65', 'seccm65', 'seccf65', 'syr65', 'syrm65',
       'syrf65', 'teapri65', 'teasec65', 'ex1', 'im1', 'xr65', 'tot1'],
      dtype='object')

| | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| Unnamed: 0 | 0.000000 | 1.000000 | 2.000000 | 3.000000 | 4.000000 |
| gdpsh465 | 6.591674 | 6.829794 | 8.895082 | 7.565275 | 7.162397 |
| bmp1l | 0.283700 | 0.614100 | 0.000000 | 0.199700 | 0.174000 |
| freeop | 0.153491 | 0.313509 | 0.204244 | 0.248714 | 0.299252 |
| freetar | 0.043888 | 0.061827 | 0.009186 | 0.036270 | 0.037367 |
| ... | ... | ... | ... | ... | ... |
| teasec65 | 17.300000 | 18.000000 | 20.700000 | 22.700000 | 17.600000 |
| ex1 | 0.072900 | 0.094000 | 0.174100 | 0.126500 | 0.121100 |
| im1 | 0.066700 | 0.143800 | 0.175000 | 0.149600 | 0.130800 |
| xr65 | 0.348000 | 0.525000 | 1.082000 | 6.625000 | 2.500000 |
| tot1 | -0.014727 | 0.005750 | -0.010040 | -0.002195 | 0.003283 |

62 rows × 5 columns

The outcome variable is represented here:

Generally speaking, it is a good idea to establish a benchmark model at the first stages of development of the machine learning model. gingado offers a class of automatic benchmarks that can be used off-the-shelf depending on the task at hand: RegressionBenchmark and ClassificationBenchmark. It is also good to keep in mind that more advanced users can create their own benchmark on top of a base class provided by gingado: ggdBenchmark.

For this application, since we are interested in running a regression task, we will use RegressionBenchmark:

What this object does is the following:

- it creates a random forest;
- different versions of the random forest (six under the default parameter grid, as the output below shows) are trained on the user data;
- the version that performs best is chosen as the benchmark;
- right after it is trained, the benchmark is documented using gingado’s ModelCard documenter.

The user can easily change the parameters above. For example, instead of a random forest the user might prefer a neural network as the benchmark. Or, in lieu of the default parameters provided by gingado, users might have their own idea of what could be a reasonable parameter space to search.

Random forests are chosen as the go-to benchmark algorithm because of their reasonably good performance in a wide variety of settings, the fact that they don’t require much data transformation (e.g., normalising the data to have zero mean and unit standard deviation), and their relative transparency about the importance of each regressor.

The first step is to initialise the benchmark object. At this time, we pass some arguments about how we want it to behave. In this case, we set the verbosity level to produce output related to each alternative considered. Then we fit it to the data.

RandomForestRegressor()

Fitting 5 folds for each of 6 candidates, totalling 30 fits

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=250; total time= 0.5s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.5s

[CV] END ................max_features=None, n_estimators=250; total time= 0.5s

RegressionBenchmark(cv=StratifiedShuffleSplit(n_splits=10, random_state=None, test_size=None,
                                              train_size=None),
                    verbose_grid=2)

As we can see above, with a few lines we have trained a random forest on the dataset. In this case, the benchmark was the best of six versions of the random forest, according to the default hyperparameter grid: 100 and 250 estimators were combined with three settings for the maximum number of regressors considered by individual trees (all regressors, the square root of the number of regressors, and the base-2 logarithm of the number of regressors). Each version was trained using 5-fold cross-validation.
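The candidate grid described above can be enumerated with the standard library alone. This is a minimal sketch of how the six candidates and 30 fits in the log arise, not gingado's internal code; the variable names are illustrative assumptions.

```python
from itertools import product

# Hyperparameter values as they appear in the training log above.
max_features_options = ["sqrt", "log2", None]
n_estimators_options = [100, 250]
n_folds = 5

# Every combination of the two hyperparameters is one candidate model.
candidates = [
    {"max_features": mf, "n_estimators": ne}
    for mf, ne in product(max_features_options, n_estimators_options)
]

# Each candidate is fitted once per cross-validation fold.
total_fits = len(candidates) * n_folds

for candidate in candidates:
    print(candidate)
print(f"{len(candidates)} candidates x {n_folds} folds = {total_fits} fits")
```

The candidates come out in the same order as the columns of the results table: candidate 4, for instance, is the forest with `max_features=None` and 100 estimators.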

Let’s see which one was the best performing in this case, and hence our benchmark model:

| | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| mean_fit_time | 0.127842 | 0.267661 | 0.106398 | 0.268515 | 0.163344 | 0.43746 |
| std_fit_time | 0.011492 | 0.015168 | 0.004224 | 0.01286 | 0.009028 | 0.006711 |
| mean_score_time | 0.007325 | 0.013783 | 0.005755 | 0.014542 | 0.005953 | 0.015245 |
| std_score_time | 0.001457 | 0.000516 | 0.000381 | 0.001177 | 0.000455 | 0.001707 |
| param_max_features | sqrt | sqrt | log2 | log2 | None | None |
| param_n_estimators | 100 | 250 | 100 | 250 | 100 | 250 |
| params | {'max_features': 'sqrt', 'n_estimators': 100} | {'max_features': 'sqrt', 'n_estimators': 250} | {'max_features': 'log2', 'n_estimators': 100} | {'max_features': 'log2', 'n_estimators': 250} | {'max_features': None, 'n_estimators': 100} | {'max_features': None, 'n_estimators': 250} |
| split0_test_score | -0.16933 | -0.092086 | -0.122926 | -0.211516 | -0.108522 | -0.160882 |
| split1_test_score | -0.295742 | -0.277869 | -0.334904 | -0.267957 | -0.353692 | -0.329935 |
| split2_test_score | 0.204602 | 0.085291 | -0.037415 | 0.082589 | 0.404614 | 0.411464 |
| split3_test_score | -0.728921 | -0.573613 | -0.623305 | -0.757371 | -0.471002 | -0.460872 |
| split4_test_score | 0.137501 | 0.126744 | 0.01719 | 0.114779 | 0.125886 | 0.098748 |
| mean_test_score | -0.170378 | -0.146307 | -0.220272 | -0.207895 | -0.080543 | -0.088295 |
| std_test_score | 0.335585 | 0.257306 | 0.234468 | 0.314339 | 0.31807 | 0.312159 |
| rank_test_score | 4 | 3 | 6 | 5 | 1 | 2 |
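To make the last rows of the table concrete, the short sketch below recomputes the mean score of candidate 4 from its fold scores and rebuilds the ranking. The numbers are copied from the table; the variable names are assumptions made for illustration.

```python
# mean_test_score row of the table above, one entry per candidate (columns 0 to 5).
mean_test_score = [-0.170378, -0.146307, -0.220272, -0.207895, -0.080543, -0.088295]

# Fold-level scores for candidate 4, copied from the split*_test_score rows.
candidate_4_splits = [-0.108522, -0.353692, 0.404614, -0.471002, 0.125886]

# The mean test score is the plain average of the fold scores.
candidate_4_mean = sum(candidate_4_splits) / len(candidate_4_splits)

# Higher scores are better, so rank 1 goes to the largest mean.
order = sorted(range(len(mean_test_score)), key=lambda i: mean_test_score[i], reverse=True)
rank_test_score = [0] * len(mean_test_score)
for rank, index in enumerate(order, start=1):
    rank_test_score[index] = rank

best_index = order[0]
print(best_index, rank_test_score, round(candidate_4_mean, 6))
```

The best candidate is column 4 (all regressors, 100 estimators). Its mean score is negative, and the scores vary widely across folds, which is a reminder that the sample is small.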

The values above are calculated with \(R^2\), the default scoring function for a random forest from the scikit-learn package. Suppose that instead we would like a benchmark model that is optimised on the maximum error, i.e. a benchmark that minimises the worst deviation between prediction and ground truth across the whole sample. These are the steps that we would take. Note that a more complete list of ready-made scoring parameters, and instructions on how to create your own scoring function, can be found in the scikit-learn documentation.
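The two scoring rules can be written out in a few lines. The sketch below uses plain Python and hypothetical growth figures rather than the notebook's data. The helper functions are illustrative re-implementations, not scikit-learn's own code.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


def max_error(y_true, y_pred):
    """Largest absolute deviation between prediction and ground truth."""
    return max(abs(t - p) for t, p in zip(y_true, y_pred))


# Hypothetical growth rates and predictions, for illustration only.
y_true = [0.02, 0.04, 0.01, 0.03]
y_pred = [0.03, 0.03, 0.03, 0.03]  # a constant prediction away from the sample mean

print(r2_score(y_true, y_pred))    # negative: worse than predicting the mean
print(-max_error(y_true, y_pred))  # negated so that higher means better
```

Two points help when reading the score tables. \(R^2\) on held-out data can be negative, which happens whenever the model predicts worse than the mean of the test fold. With `scoring='max_error'`, scikit-learn reports the negated maximum error so that higher values are always better, which is why all scores in the second table are negative.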

Fitting 5 folds for each of 6 candidates, totalling 30 fits

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.2s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=250; total time= 0.5s

[CV] END ................max_features=None, n_estimators=250; total time= 0.5s

[CV] END ................max_features=None, n_estimators=250; total time= 0.5s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

RegressionBenchmark(cv=StratifiedShuffleSplit(n_splits=10, random_state=None, test_size=None,
                                              train_size=None),
                    scoring='max_error', verbose_grid=2)

| | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| mean_fit_time | 0.125901 | 0.284121 | 0.116043 | 0.25507 | 0.16534 | 0.446526 |
| std_fit_time | 0.010974 | 0.013291 | 0.003123 | 0.002374 | 0.006095 | 0.029097 |
| mean_score_time | 0.006425 | 0.013692 | 0.006476 | 0.013916 | 0.005643 | 0.015142 |
| std_score_time | 0.000569 | 0.000061 | 0.000596 | 0.00071 | 0.000369 | 0.001178 |
| param_max_features | sqrt | sqrt | log2 | log2 | None | None |
| param_n_estimators | 100 | 250 | 100 | 250 | 100 | 250 |
| params | {'max_features': 'sqrt', 'n_estimators': 100} | {'max_features': 'sqrt', 'n_estimators': 250} | {'max_features': 'log2', 'n_estimators': 100} | {'max_features': 'log2', 'n_estimators': 250} | {'max_features': None, 'n_estimators': 100} | {'max_features': None, 'n_estimators': 250} |
| split0_test_score | -0.091383 | -0.09865 | -0.089205 | -0.089668 | -0.099687 | -0.100254 |
| split1_test_score | -0.13291 | -0.131386 | -0.126901 | -0.131514 | -0.168115 | -0.166914 |
| split2_test_score | -0.105365 | -0.103247 | -0.107508 | -0.110758 | -0.087416 | -0.089252 |
| split3_test_score | -0.156452 | -0.154446 | -0.155642 | -0.158177 | -0.154062 | -0.154419 |
| split4_test_score | -0.12944 | -0.122188 | -0.121306 | -0.128108 | -0.137235 | -0.123249 |
| mean_test_score | -0.12311 | -0.121983 | -0.120112 | -0.123645 | -0.129303 | -0.126818 |
| std_test_score | 0.022668 | 0.020188 | 0.022018 | 0.022781 | 0.031029 | 0.029997 |
| rank_test_score | 3 | 2 | 1 | 4 | 6 | 5 |

Now we even have two benchmark models.

We could further tweak and adjust them, but one of the ideas behind having a benchmark is that it is simple and easy to set up.

Let’s retain only the first benchmark, for simplicity, and now look at the predictions, comparing them to the original growth values.

/Users/douglasaraujo/Coding/.venv_gingado/lib/python3.10/site-packages/sklearn/base.py:443: UserWarning: X has feature names, but RandomForestRegressor was fitted without feature names

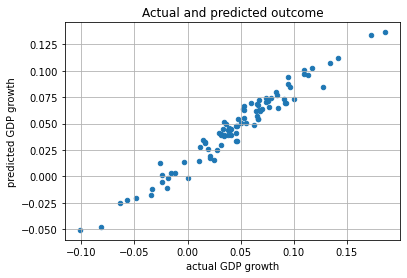

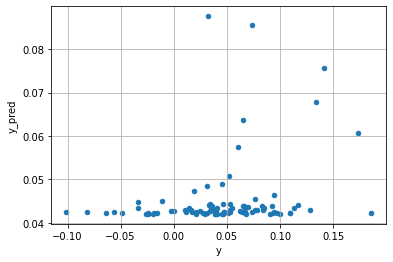

warnings.warn(<AxesSubplot:title={'center':'Actual and predicted outcome'}, xlabel='actual GDP growth', ylabel='predicted GDP growth'>

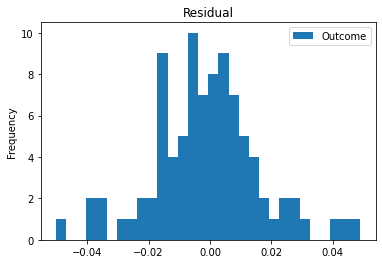

And now a histogram of the benchmark’s errors:

Figure: Residual (y-axis: Frequency).

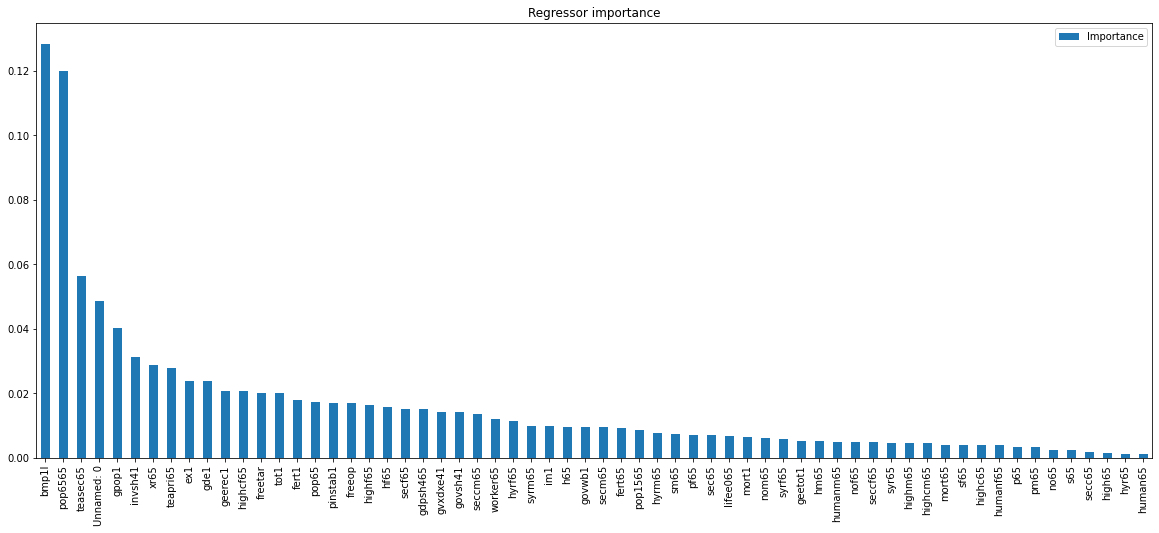

Since the benchmark is a random forest model, we can see which regressors are the most important, measured as the average reduction in impurity across the trees in the random forest that actually use that particular regressor. The importances are scaled so that they sum to one across all features. A higher importance value indicates that the regressor contributes more to the final prediction.

Figure: Regressor importance.

From the graph above, we can see that the regressor bmp1l (black-market premium on foreign exchange) predominates. Interestingly, Belloni, Chernozhukov, and Hansen (2011), using square-root lasso, also find this regressor to be important.
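The scaling and ranking of importances can be sketched as follows. The raw values and regressor names are hypothetical and do not come from this notebook. A fitted scikit-learn forest exposes already-normalised values through its `feature_importances_` attribute, so the first step is shown only to make the scaling explicit.

```python
# Hypothetical raw impurity reductions per regressor (not values from this notebook).
raw_importance = {"a": 4.0, "b": 2.5, "c": 2.0, "d": 1.0, "e": 0.5}

# Scale so that the importances sum to one.
total = sum(raw_importance.values())
importance = {name: value / total for name, value in raw_importance.items()}

# Sort from most to least important and pick the two extremes.
ranked = sorted(importance, key=importance.get, reverse=True)
k = 2
top_k = ranked[:k]
bottom_k = ranked[-k:]

print(importance)
print("most important:", top_k)
print("least important:", bottom_k)
```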

Now we can leverage our automatic benchmark model to test the conditional convergence hypothesis, i.e. the proposition that countries with lower starting GDP tend to grow faster than other comparable countries. In other words, this hypothesis predicts that when GDP growth is regressed on the level of past GDP and on an adequate set of covariates \(X\), the coefficient on past GDP levels is negative.

Since we have the results for the importance of each regressor in separating countries by their growth result, we can compare the estimated coefficient for GDP levels in regressions that include different regressors in the vector \(X\). To keep this example simple, the following three models are estimated: one with the five most important regressors, one with the five least important regressors, and one with the GDP level alone.
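One way to write the three specifications, consistent with the design matrices and the results table shown further down, is given here. The symbols are assumptions made for this sketch rather than the author's notation: \(g_i\) is the growth outcome of country \(i\), \(\text{GDP}_i\) is its starting GDP level (the column gdpsh465), and \(X_i^{\text{top5}}\) and \(X_i^{\text{bottom5}}\) collect the five most and least important regressors.

\[
\begin{aligned}
g_i &= \alpha_1 + \beta_1 \, \text{GDP}_i + \gamma_1' X_i^{\text{top5}} + \varepsilon_{1,i} \\
g_i &= \alpha_2 + \beta_2 \, \text{GDP}_i + \gamma_2' X_i^{\text{bottom5}} + \varepsilon_{2,i} \\
g_i &= \alpha_3 + \beta_3 \, \text{GDP}_i + \varepsilon_{3,i}
\end{aligned}
\]

In this notation, conditional convergence corresponds to \(\beta_1 < 0\).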

A result that would be consistent with the conditionality of the conditional convergence hypothesis is the first equation resulting in a negative coefficient for starting GDP, while the following two equations may not necessarily be successful in identifying a negative coefficient. This is because the least important regressors are not likely to have sufficient predictive power to separate countries into comparable groups.

The five most and least important regressors are:

Most important:

| Regressor | Importance |
|---|---|
| bmp1l | 0.128438 |
| pop6565 | 0.120012 |
| teasec65 | 0.056266 |
| Unnamed: 0 | 0.048724 |
| gpop1 | 0.040157 |

Least important:

| Regressor | Importance |
|---|---|
| human65 | 0.001123 |
| hyr65 | 0.001158 |
| high65 | 0.001531 |
| secc65 | 0.001928 |
| s65 | 0.002282 |

| | const | gdpsh465 | bmp1l | pop6565 | teasec65 | Unnamed: 0 | gpop1 |
|---|---|---|---|---|---|---|---|
| 0 | 1.0 | 6.591674 | 0.2837 | 0.027591 | 17.3 | 0 | 0.0203 |
| 1 | 1.0 | 6.829794 | 0.6141 | 0.035637 | 18.0 | 1 | 0.0185 |
| 2 | 1.0 | 8.895082 | 0.0000 | 0.076685 | 20.7 | 2 | 0.0188 |
| 3 | 1.0 | 7.565275 | 0.1997 | 0.031039 | 22.7 | 3 | 0.0345 |
| 4 | 1.0 | 7.162397 | 0.1740 | 0.026281 | 17.6 | 4 | 0.0310 |

| | const | gdpsh465 | human65 | hyr65 | high65 | secc65 | s65 |
|---|---|---|---|---|---|---|---|
| 0 | 1.0 | 6.591674 | 0.301 | 0.004 | 0.12 | 0.13 | 0.04 |
| 1 | 1.0 | 6.829794 | 0.706 | 0.027 | 0.70 | 1.36 | 0.16 |
| 2 | 1.0 | 8.895082 | 8.317 | 0.424 | 16.67 | 15.68 | 0.56 |
| 3 | 1.0 | 7.565275 | 3.833 | 0.104 | 3.10 | 2.76 | 0.24 |
| 4 | 1.0 | 7.162397 | 1.900 | 0.022 | 0.67 | 2.17 | 0.17 |

| | const | gdpsh465 |
|---|---|---|
| 0 | 1.0 | 6.591674 |
| 1 | 1.0 | 6.829794 |
| 2 | 1.0 | 8.895082 |
| 3 | 1.0 | 7.565275 |
| 4 | 1.0 | 7.162397 |

| | topfive | bottomfive | onlyGDPlevel |
|---|---|---|---|
| [0.025 | -0.033143 | -0.037257 | -0.010810 |
| coef on GDP levels | -0.016378 | -0.014432 | 0.001317 |
| 0.975] | 0.000387 | 0.008394 | 0.013444 |

The equation using the top five regressors in explanatory power yielded a negative point estimate whose 95% confidence interval lies almost entirely below zero: the upper bound, 0.000387, is only marginally positive. In contrast, the interval from the regression using the bottom five regressors extends well above zero (although the coefficient point estimate is still negative). Finally, the regression on the GDP level alone resulted, as in the past literature, in a point estimate that is not statistically different from zero.
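The intervals in the results table can be compared directly. The sketch below copies the bounds and point estimates from that table. The dictionary layout and the summary keys are assumptions made for illustration.

```python
# (lower bound, point estimate, upper bound) copied from the results table above.
results = {
    "topfive": (-0.033143, -0.016378, 0.000387),
    "bottomfive": (-0.037257, -0.014432, 0.008394),
    "onlyGDPlevel": (-0.010810, 0.001317, 0.013444),
}

summary = {}
for name, (lower, coef, upper) in results.items():
    summary[name] = {
        "negative_point_estimate": coef < 0,
        # The interval excludes zero only if both bounds share the same sign.
        "interval_excludes_zero": upper < 0 or lower > 0,
        "interval_width": round(upper - lower, 6),
    }
    print(name, summary[name])
```

With these values, the top-five upper bound sits only marginally above zero, while the other two intervals extend further into positive territory.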

The results above offer a different way to add evidence to the conditional convergence hypothesis. In particular, with the help of gingado’s RegressionBenchmark model, it is possible to distinguish the covariates that can meaningfully serve in a growth equation from those that cannot. This is important because if the covariate selection for some reason included only variables with little explanatory power instead of the most relevant ones, an economist might erroneously reach a different conclusion.

Importantly for model documentation, the benchmark already has some baseline documentation set up. If the user wishes, they can use that as a basis to document their model. Note that the output is in a raw format that is suitable for machine reading and writing. Intermediate and advanced users may wish to use that format to construct personalised forms, documents, etc.

{'model_details': {'developer': 'Person or organisation developing the model',

'datetime': '2022-09-24 00:46:53 ',

'version': 'Model version',

'type': 'Model type',

'info': {'_estimator_type': 'regressor',

'best_estimator_': RandomForestRegressor(max_features=None, oob_score=True),

'best_index_': 4,

'best_params_': {'max_features': None, 'n_estimators': 100},

'best_score_': -0.08054342152726568,

'cv_results_': {'mean_fit_time': array([0.12784224, 0.26766114, 0.10639763, 0.26851478, 0.16334381,

0.43746042]),

'std_fit_time': array([0.01149181, 0.0151677 , 0.00422391, 0.01286013, 0.00902753,

0.00671144]),

'mean_score_time': array([0.00732503, 0.01378322, 0.00575457, 0.01454234, 0.00595331,

0.01524515]),

'std_score_time': array([0.00145713, 0.00051617, 0.00038052, 0.00117699, 0.00045451,

0.00170701]),

'param_max_features': masked_array(data=['sqrt', 'sqrt', 'log2', 'log2', None, None],

mask=[False, False, False, False, False, False],

fill_value='?',

dtype=object),

'param_n_estimators': masked_array(data=[100, 250, 100, 250, 100, 250],

mask=[False, False, False, False, False, False],

fill_value='?',

dtype=object),

'params': [{'max_features': 'sqrt', 'n_estimators': 100},

{'max_features': 'sqrt', 'n_estimators': 250},

{'max_features': 'log2', 'n_estimators': 100},

{'max_features': 'log2', 'n_estimators': 250},

{'max_features': None, 'n_estimators': 100},

{'max_features': None, 'n_estimators': 250}],

'split0_test_score': array([-0.16933021, -0.09208619, -0.12292621, -0.21151594, -0.10852241,

-0.16088226]),

'split1_test_score': array([-0.2957416 , -0.27786931, -0.33490436, -0.26795696, -0.35369235,

-0.32993453]),

'split2_test_score': array([ 0.20460155, 0.08529122, -0.03741542, 0.08258917, 0.40461419,

0.41146413]),

'split3_test_score': array([-0.72892109, -0.5736127 , -0.62330486, -0.75737116, -0.4710023 ,

-0.46087249]),

'split4_test_score': array([0.13750078, 0.12674359, 0.01719048, 0.11477866, 0.12588575,

0.09874811]),

'mean_test_score': array([-0.17037811, -0.14630668, -0.22027207, -0.20789524, -0.08054342,

-0.08829541]),

'std_test_score': array([0.33558501, 0.25730646, 0.23446843, 0.31433865, 0.31806987,

0.31215921]),

'rank_test_score': array([4, 3, 6, 5, 1, 2], dtype=int32)},

'multimetric_': False,

'n_features_in_': 62,

'n_splits_': 5,

'refit_time_': 0.21793103218078613,

'scorer_': <function sklearn.metrics._scorer._passthrough_scorer(estimator, *args, **kwargs)>},

'paper': 'Paper or other resource for more information',

'citation': 'Citation details',

'license': 'License',

'contact': 'Where to send questions or comments about the model'},

'intended_use': {'primary_uses': 'Primary intended uses',

'primary_users': 'Primary intended users',

'out_of_scope': 'Out-of-scope use cases'},

'factors': {'relevant': 'Relevant factors',

'evaluation': 'Evaluation factors'},

'metrics': {'performance_measures': 'Model performance measures',

'thresholds': 'Decision thresholds',

'variation_approaches': 'Variation approaches'},

'evaluation_data': {'datasets': 'Datasets',

'motivation': 'Motivation',

'preprocessing': 'Preprocessing'},

'training_data': {'training_data': 'Information on training data'},

'quant_analyses': {'unitary': 'Unitary results',

'intersectional': 'Intersectional results'},

'ethical_considerations': {'sensitive_data': 'Does the model use any sensitive data (e.g., protected classes)?',

'human_life': 'Is the model intended to inform decisions about mat- ters central to human life or flourishing - e.g., health or safety? Or could it be used in such a way?',

'mitigations': 'What risk mitigation strategies were used during model development?',

'risks_and_harms': '\n What risks may be present in model usage? Try to identify the potential recipients, likelihood, and magnitude of harms. \n If these cannot be determined, note that they were consid- ered but remain unknown',

'use_cases': 'Are there any known model use cases that are especially fraught?',

'additional_information': '\n If possible, this section should also include any additional ethical considerations that went into model development, \n for example, review by an external board, or testing with a specific community.'},

'caveats_recommendations': {'caveats': 'For example, did the results suggest any further testing? Were there any relevant groups that were not represented in the evaluation dataset?',

'recommendations': 'Are there additional recommendations for model use? What are the ideal characteristics of an evaluation dataset for this model?'}}

Since there is some information in the model documentation that was automatically added, we might want to concentrate on the fields in the model card that are yet to be answered. Indeed, this is the purpose of gingado’s automatic documentation: to free up time that users can invest, if they wish, in model documentation.

['model_details__developer',

'model_details__version',

'model_details__type',

'model_details__paper',

'model_details__citation',

'model_details__license',

'model_details__contact',

'intended_use__primary_uses',

'intended_use__primary_users',

'intended_use__out_of_scope',

'factors__relevant',

'factors__evaluation',

'metrics__performance_measures',

'metrics__thresholds',

'metrics__variation_approaches',

'evaluation_data__datasets',

'evaluation_data__motivation',

'evaluation_data__preprocessing',

'training_data__training_data',

'quant_analyses__unitary',

'quant_analyses__intersectional',

'ethical_considerations__sensitive_data',

'ethical_considerations__human_life',

'ethical_considerations__mitigations',

'ethical_considerations__risks_and_harms',

'ethical_considerations__use_cases',

'ethical_considerations__additional_information',

'caveats_recommendations__caveats',

'caveats_recommendations__recommendations']

Let’s fill in some information:

Note the format, based on a Python dictionary. In particular, the open_questions method results include keys divided by double underscores. As seen above, these should be interpreted as different levels of the documentation template, leading to a nested dictionary.
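The mapping between double-underscore keys and the nested dictionary can be illustrated with plain Python. This sketch mimics the behaviour described above on a two-section excerpt of the template. The helpers `flatten_keys` and `merge_answers` are hypothetical and are not gingado's implementation, and the answers are invented for the example.

```python
def flatten_keys(card, prefix=""):
    """Flatten a nested dictionary into {'level1__level2': value} pairs."""
    flat = {}
    for key, value in card.items():
        name = f"{prefix}__{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten_keys(value, name))
        else:
            flat[name] = value
    return flat


def merge_answers(card, answers):
    """Recursively merge a nested dictionary of answers into the card."""
    for key, value in answers.items():
        if isinstance(value, dict) and isinstance(card.get(key), dict):
            merge_answers(card[key], value)
        else:
            card[key] = value


# Excerpt of the template shown above; placeholder text marks an open question.
template = {
    "intended_use": {
        "primary_uses": "Primary intended uses",
        "primary_users": "Primary intended users",
        "out_of_scope": "Out-of-scope use cases",
    },
    "factors": {"relevant": "Relevant factors", "evaluation": "Evaluation factors"},
}
placeholders = flatten_keys(template)

card = {section: dict(fields) for section, fields in template.items()}
merge_answers(card, {"intended_use": {
    "primary_uses": "Pedagogical example only.",   # hypothetical answer
    "primary_users": "Readers of this notebook.",  # hypothetical answer
}})

# A question is still open while its value equals the template placeholder.
still_open = [key for key, value in flatten_keys(card).items() if value == placeholders[key]]
print(still_open)
```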

Now we can confirm that the questions answered above are no longer “open questions”:

['model_details__developer',

'model_details__version',

'model_details__type',

'model_details__paper',

'model_details__citation',

'model_details__license',

'model_details__contact',

'intended_use__out_of_scope',

'factors__relevant',

'factors__evaluation',

'metrics__performance_measures',

'metrics__thresholds',

'metrics__variation_approaches',

'evaluation_data__datasets',

'evaluation_data__motivation',

'evaluation_data__preprocessing',

'training_data__training_data',

'quant_analyses__unitary',

'quant_analyses__intersectional',

'ethical_considerations__sensitive_data',

'ethical_considerations__human_life',

'ethical_considerations__mitigations',

'ethical_considerations__risks_and_harms',

'ethical_considerations__use_cases',

'ethical_considerations__additional_information',

'caveats_recommendations__caveats',

'caveats_recommendations__recommendations']

If we want, we can at any time save the documentation to a local JSON file, as well as read another document.
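As a rough illustration of the save-and-read round trip, the sketch below writes a small nested dictionary to JSON and loads it back using only the standard library. It does not use gingado's own methods; the file name and card content are hypothetical.

```python
import json
import os
import tempfile

# Hypothetical excerpt of a filled-in model card.
card = {"intended_use": {"primary_uses": "Pedagogical example only."}}

with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "model_card.json")  # hypothetical file name

    # Save the documentation to a local JSON file.
    with open(path, "w", encoding="utf-8") as f:
        json.dump(card, f, indent=2)

    # Read the document back into a dictionary.
    with open(path, encoding="utf-8") as f:
        restored = json.load(f)

print(restored == card)
```

A full model card also holds objects such as the fitted estimator and NumPy arrays. Python's `json` module cannot serialise these directly, so they would first need to be converted, for example to strings or lists.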

The benchmark model may be enough for some analyses, or maybe the user is interested in using the benchmark to explore the data and have an understanding of the importance of each regressor, to concentrate their work on data that can be meaningful for their purposes. But oftentimes a user will want to seek a machine learning model that performs as well as possible.

For users that want to manually create other models, gingado allows the possibility of comparing them with the benchmark. If the user model is better, it becomes the new benchmark!

For the following analyses, we will use K-fold as cross-validation, with 5 splits of the sample.
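For intuition, a 5-fold split of the 90 countries can be sketched in plain Python. This is an illustrative, unshuffled splitter written for this example, not scikit-learn's `KFold` class; the function name is an assumption.

```python
def k_fold_indices(n_samples, n_splits=5):
    """Yield (train, test) index lists for contiguous, unshuffled folds."""
    fold_sizes = [n_samples // n_splits] * n_splits
    for i in range(n_samples % n_splits):
        fold_sizes[i] += 1  # spread any remainder over the first folds
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, test
        start += size


folds = list(k_fold_indices(n_samples=90, n_splits=5))
for train, test in folds:
    print(len(train), len(test), test[0], test[-1])
```

Each country appears in exactly one test fold, so every model is trained on 72 countries and evaluated on the remaining 18.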

Fitting 5 folds for each of 9 candidates, totalling 45 fits

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.3s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.3s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

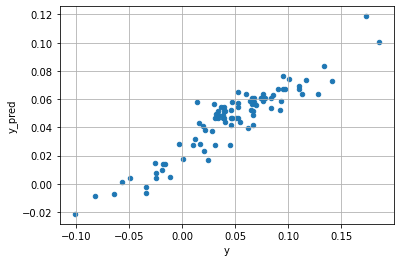

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s<AxesSubplot:xlabel='y', ylabel='y_pred'>

Fitting 5 folds for each of 3 candidates, totalling 15 fits

[CV] END ..........................................alpha=0.5; total time= 0.0s

[CV] END ..........................................alpha=0.5; total time= 0.0s

[CV] END ..........................................alpha=0.5; total time= 0.0s

[CV] END ..........................................alpha=0.5; total time= 0.0s

[CV] END ..........................................alpha=0.5; total time= 0.0s

[CV] END ............................................alpha=1; total time= 0.0s

[CV] END ............................................alpha=1; total time= 0.0s

[CV] END ............................................alpha=1; total time= 0.0s

[CV] END ............................................alpha=1; total time= 0.0s

[CV] END ............................................alpha=1; total time= 0.0s

[CV] END .........................................alpha=1.25; total time= 0.0s

[CV] END .........................................alpha=1.25; total time= 0.0s

[CV] END .........................................alpha=1.25; total time= 0.0s

[CV] END .........................................alpha=1.25; total time= 0.0s

[CV] END .........................................alpha=1.25; total time= 0.0s

<AxesSubplot:xlabel='y', ylabel='y_pred'>

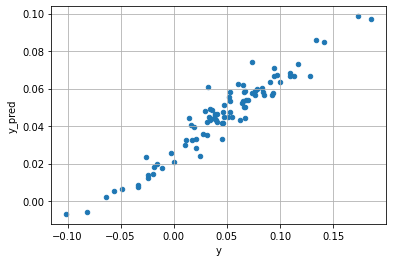

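gingado lets users compare candidate models against the existing benchmark with a single call: the compare method. Each candidate is evaluated by cross-validation alongside the benchmark, which is why the log that follows repeats the candidates' grid searches once per fold.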
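To make the idea behind such a comparison concrete, the sketch below reproduces it with scikit-learn only. It is not gingado's implementation: the synthetic data, the reduced grids, the choice of Lasso as the stand-in benchmark and the variable names are all assumptions made for illustration. The step name candidate_estimator is borrowed from the log output in this notebook. An outer grid search treats each tuned model as one value of a single pipeline step, so every candidate is scored on the same folds.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.linear_model import Lasso
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline

# Synthetic stand-in for the growth data (assumption: 90 rows, 10 features).
X, y = make_regression(n_samples=90, n_features=10, noise=5.0, random_state=0)

# Each model carries its own inner grid search, as in the log output.
# Grids are deliberately small here so the sketch runs quickly.
benchmark = GridSearchCV(Lasso(max_iter=10_000), {"alpha": [0.5, 1, 1.25]})
forest = GridSearchCV(
    RandomForestRegressor(n_estimators=20, random_state=0),
    {"max_features": ["sqrt", None]},
)
boosting = GridSearchCV(
    GradientBoostingRegressor(n_estimators=20, random_state=0),
    {"max_depth": [3, 6]},
)

# The outer search swaps whole estimators in and out of one pipeline step,
# so the benchmark and the candidates are compared on identical folds.
search = GridSearchCV(
    Pipeline([("candidate_estimator", benchmark)]),
    param_grid={"candidate_estimator": [benchmark, forest, boosting]},
    cv=3,
)
search.fit(X, y)

# The winning entry is one of the three tuned models listed above.
winner = search.best_params_["candidate_estimator"]
print(type(winner.estimator).__name__, round(search.best_score_, 3))
```

The printed model name and score depend on the synthetic data and say nothing about the growth dataset; only the mechanics of the comparison carry over.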

Fitting 5 folds for each of 4 candidates, totalling 20 fits

Fitting 5 folds for each of 6 candidates, totalling 30 fits

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.2s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END candidate_estimator=GridSearchCV(estimator=RandomForestRegressor(oob_score=True),

param_grid={'max_features': ['sqrt', 'log2', None],

'n_estimators': [100, 250]},

verbose=2), candidate_estimator__cv=None, candidate_estimator__error_score=nan, candidate_estimator__estimator=RandomForestRegressor(oob_score=True), candidate_estimator__estimator__bootstrap=True, candidate_estimator__estimator__ccp_alpha=0.0, candidate_estimator__estimator__criterion=squared_error, candidate_estimator__estimator__max_depth=None, candidate_estimator__estimator__max_features=1.0, candidate_estimator__estimator__max_leaf_nodes=None, candidate_estimator__estimator__max_samples=None, candidate_estimator__estimator__min_impurity_decrease=0.0, candidate_estimator__estimator__min_samples_leaf=1, candidate_estimator__estimator__min_samples_split=2, candidate_estimator__estimator__min_weight_fraction_leaf=0.0, candidate_estimator__estimator__n_estimators=100, candidate_estimator__estimator__n_jobs=None, candidate_estimator__estimator__oob_score=True, candidate_estimator__estimator__random_state=None, candidate_estimator__estimator__verbose=0, candidate_estimator__estimator__warm_start=False, candidate_estimator__n_jobs=None, candidate_estimator__param_grid={'n_estimators': [100, 250], 'max_features': ['sqrt', 'log2', None]}, candidate_estimator__pre_dispatch=2*n_jobs, candidate_estimator__refit=True, candidate_estimator__return_train_score=False, candidate_estimator__scoring=None, candidate_estimator__verbose=2; total time= 7.0s

Fitting 5 folds for each of 6 candidates, totalling 30 fits

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.1s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END candidate_estimator=GridSearchCV(estimator=RandomForestRegressor(oob_score=True),

param_grid={'max_features': ['sqrt', 'log2', None],

'n_estimators': [100, 250]},

verbose=2), candidate_estimator__cv=None, candidate_estimator__error_score=nan, candidate_estimator__estimator=RandomForestRegressor(oob_score=True), candidate_estimator__estimator__bootstrap=True, candidate_estimator__estimator__ccp_alpha=0.0, candidate_estimator__estimator__criterion=squared_error, candidate_estimator__estimator__max_depth=None, candidate_estimator__estimator__max_features=1.0, candidate_estimator__estimator__max_leaf_nodes=None, candidate_estimator__estimator__max_samples=None, candidate_estimator__estimator__min_impurity_decrease=0.0, candidate_estimator__estimator__min_samples_leaf=1, candidate_estimator__estimator__min_samples_split=2, candidate_estimator__estimator__min_weight_fraction_leaf=0.0, candidate_estimator__estimator__n_estimators=100, candidate_estimator__estimator__n_jobs=None, candidate_estimator__estimator__oob_score=True, candidate_estimator__estimator__random_state=None, candidate_estimator__estimator__verbose=0, candidate_estimator__estimator__warm_start=False, candidate_estimator__n_jobs=None, candidate_estimator__param_grid={'n_estimators': [100, 250], 'max_features': ['sqrt', 'log2', None]}, candidate_estimator__pre_dispatch=2*n_jobs, candidate_estimator__refit=True, candidate_estimator__return_train_score=False, candidate_estimator__scoring=None, candidate_estimator__verbose=2; total time= 6.9s

Fitting 5 folds for each of 6 candidates, totalling 30 fits

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=None, n_estimators=100; total time= 0.1s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.1s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END candidate_estimator=GridSearchCV(estimator=RandomForestRegressor(oob_score=True),

param_grid={'max_features': ['sqrt', 'log2', None],

'n_estimators': [100, 250]},

verbose=2), candidate_estimator__cv=None, candidate_estimator__error_score=nan, candidate_estimator__estimator=RandomForestRegressor(oob_score=True), candidate_estimator__estimator__bootstrap=True, candidate_estimator__estimator__ccp_alpha=0.0, candidate_estimator__estimator__criterion=squared_error, candidate_estimator__estimator__max_depth=None, candidate_estimator__estimator__max_features=1.0, candidate_estimator__estimator__max_leaf_nodes=None, candidate_estimator__estimator__max_samples=None, candidate_estimator__estimator__min_impurity_decrease=0.0, candidate_estimator__estimator__min_samples_leaf=1, candidate_estimator__estimator__min_samples_split=2, candidate_estimator__estimator__min_weight_fraction_leaf=0.0, candidate_estimator__estimator__n_estimators=100, candidate_estimator__estimator__n_jobs=None, candidate_estimator__estimator__oob_score=True, candidate_estimator__estimator__random_state=None, candidate_estimator__estimator__verbose=0, candidate_estimator__estimator__warm_start=False, candidate_estimator__n_jobs=None, candidate_estimator__param_grid={'n_estimators': [100, 250], 'max_features': ['sqrt', 'log2', None]}, candidate_estimator__pre_dispatch=2*n_jobs, candidate_estimator__refit=True, candidate_estimator__return_train_score=False, candidate_estimator__scoring=None, candidate_estimator__verbose=2; total time= 6.4s

Fitting 5 folds for each of 6 candidates, totalling 30 fits

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=None, n_estimators=100; total time= 0.1s

[CV] END ................max_features=None, n_estimators=100; total time= 0.1s

[CV] END ................max_features=None, n_estimators=100; total time= 0.1s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.1s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END candidate_estimator=GridSearchCV(estimator=RandomForestRegressor(oob_score=True),

param_grid={'max_features': ['sqrt', 'log2', None],

'n_estimators': [100, 250]},

verbose=2), candidate_estimator__cv=None, candidate_estimator__error_score=nan, candidate_estimator__estimator=RandomForestRegressor(oob_score=True), candidate_estimator__estimator__bootstrap=True, candidate_estimator__estimator__ccp_alpha=0.0, candidate_estimator__estimator__criterion=squared_error, candidate_estimator__estimator__max_depth=None, candidate_estimator__estimator__max_features=1.0, candidate_estimator__estimator__max_leaf_nodes=None, candidate_estimator__estimator__max_samples=None, candidate_estimator__estimator__min_impurity_decrease=0.0, candidate_estimator__estimator__min_samples_leaf=1, candidate_estimator__estimator__min_samples_split=2, candidate_estimator__estimator__min_weight_fraction_leaf=0.0, candidate_estimator__estimator__n_estimators=100, candidate_estimator__estimator__n_jobs=None, candidate_estimator__estimator__oob_score=True, candidate_estimator__estimator__random_state=None, candidate_estimator__estimator__verbose=0, candidate_estimator__estimator__warm_start=False, candidate_estimator__n_jobs=None, candidate_estimator__param_grid={'n_estimators': [100, 250], 'max_features': ['sqrt', 'log2', None]}, candidate_estimator__pre_dispatch=2*n_jobs, candidate_estimator__refit=True, candidate_estimator__return_train_score=False, candidate_estimator__scoring=None, candidate_estimator__verbose=2; total time= 6.7s

Fitting 5 folds for each of 6 candidates, totalling 30 fits

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=100; total time= 0.1s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=sqrt, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=100; total time= 0.1s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=log2, n_estimators=250; total time= 0.3s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.1s

[CV] END ................max_features=None, n_estimators=100; total time= 0.1s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=100; total time= 0.2s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END ................max_features=None, n_estimators=250; total time= 0.4s

[CV] END candidate_estimator=GridSearchCV(estimator=RandomForestRegressor(oob_score=True),

param_grid={'max_features': ['sqrt', 'log2', None],

'n_estimators': [100, 250]},

verbose=2), candidate_estimator__cv=None, candidate_estimator__error_score=nan, candidate_estimator__estimator=RandomForestRegressor(oob_score=True), candidate_estimator__estimator__bootstrap=True, candidate_estimator__estimator__ccp_alpha=0.0, candidate_estimator__estimator__criterion=squared_error, candidate_estimator__estimator__max_depth=None, candidate_estimator__estimator__max_features=1.0, candidate_estimator__estimator__max_leaf_nodes=None, candidate_estimator__estimator__max_samples=None, candidate_estimator__estimator__min_impurity_decrease=0.0, candidate_estimator__estimator__min_samples_leaf=1, candidate_estimator__estimator__min_samples_split=2, candidate_estimator__estimator__min_weight_fraction_leaf=0.0, candidate_estimator__estimator__n_estimators=100, candidate_estimator__estimator__n_jobs=None, candidate_estimator__estimator__oob_score=True, candidate_estimator__estimator__random_state=None, candidate_estimator__estimator__verbose=0, candidate_estimator__estimator__warm_start=False, candidate_estimator__n_jobs=None, candidate_estimator__param_grid={'n_estimators': [100, 250], 'max_features': ['sqrt', 'log2', None]}, candidate_estimator__pre_dispatch=2*n_jobs, candidate_estimator__refit=True, candidate_estimator__return_train_score=False, candidate_estimator__scoring=None, candidate_estimator__verbose=2; total time= 6.7s

Fitting 5 folds for each of 9 candidates, totalling 45 fits

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END candidate_estimator=GridSearchCV(estimator=GradientBoostingRegressor(), n_jobs=-1,

param_grid={'learning_rate': [0.01, 0.1, 0.25],

'max_depth': [3, 6, 9]},

verbose=2), candidate_estimator__cv=None, candidate_estimator__error_score=nan, candidate_estimator__estimator=GradientBoostingRegressor(), candidate_estimator__estimator__alpha=0.9, candidate_estimator__estimator__ccp_alpha=0.0, candidate_estimator__estimator__criterion=friedman_mse, candidate_estimator__estimator__init=None, candidate_estimator__estimator__learning_rate=0.1, candidate_estimator__estimator__loss=squared_error, candidate_estimator__estimator__max_depth=3, candidate_estimator__estimator__max_features=None, candidate_estimator__estimator__max_leaf_nodes=None, candidate_estimator__estimator__min_impurity_decrease=0.0, candidate_estimator__estimator__min_samples_leaf=1, candidate_estimator__estimator__min_samples_split=2, candidate_estimator__estimator__min_weight_fraction_leaf=0.0, candidate_estimator__estimator__n_estimators=100, candidate_estimator__estimator__n_iter_no_change=None, candidate_estimator__estimator__random_state=None, candidate_estimator__estimator__subsample=1.0, candidate_estimator__estimator__tol=0.0001, candidate_estimator__estimator__validation_fraction=0.1, candidate_estimator__estimator__verbose=0, candidate_estimator__estimator__warm_start=False, candidate_estimator__n_jobs=-1, candidate_estimator__param_grid={'learning_rate': [0.01, 0.1, 0.25], 'max_depth': [3, 6, 9]}, candidate_estimator__pre_dispatch=2*n_jobs, candidate_estimator__refit=True, candidate_estimator__return_train_score=False, candidate_estimator__scoring=None, candidate_estimator__verbose=2; total time= 0.6s

Fitting 5 folds for each of 9 candidates, totalling 45 fits

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END candidate_estimator=GridSearchCV(estimator=GradientBoostingRegressor(), n_jobs=-1,

param_grid={'learning_rate': [0.01, 0.1, 0.25],

'max_depth': [3, 6, 9]},

verbose=2), candidate_estimator__cv=None, candidate_estimator__error_score=nan, candidate_estimator__estimator=GradientBoostingRegressor(), candidate_estimator__estimator__alpha=0.9, candidate_estimator__estimator__ccp_alpha=0.0, candidate_estimator__estimator__criterion=friedman_mse, candidate_estimator__estimator__init=None, candidate_estimator__estimator__learning_rate=0.1, candidate_estimator__estimator__loss=squared_error, candidate_estimator__estimator__max_depth=3, candidate_estimator__estimator__max_features=None, candidate_estimator__estimator__max_leaf_nodes=None, candidate_estimator__estimator__min_impurity_decrease=0.0, candidate_estimator__estimator__min_samples_leaf=1, candidate_estimator__estimator__min_samples_split=2, candidate_estimator__estimator__min_weight_fraction_leaf=0.0, candidate_estimator__estimator__n_estimators=100, candidate_estimator__estimator__n_iter_no_change=None, candidate_estimator__estimator__random_state=None, candidate_estimator__estimator__subsample=1.0, candidate_estimator__estimator__tol=0.0001, candidate_estimator__estimator__validation_fraction=0.1, candidate_estimator__estimator__verbose=0, candidate_estimator__estimator__warm_start=False, candidate_estimator__n_jobs=-1, candidate_estimator__param_grid={'learning_rate': [0.01, 0.1, 0.25], 'max_depth': [3, 6, 9]}, candidate_estimator__pre_dispatch=2*n_jobs, candidate_estimator__refit=True, candidate_estimator__return_train_score=False, candidate_estimator__scoring=None, candidate_estimator__verbose=2; total time= 0.5s

Fitting 5 folds for each of 9 candidates, totalling 45 fits

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END candidate_estimator=GridSearchCV(estimator=GradientBoostingRegressor(), n_jobs=-1,
param_grid={'learning_rate': [0.01, 0.1, 0.25],
'max_depth': [3, 6, 9]},
verbose=2), candidate_estimator__cv=None, candidate_estimator__error_score=nan, candidate_estimator__estimator=GradientBoostingRegressor(), candidate_estimator__estimator__alpha=0.9, candidate_estimator__estimator__ccp_alpha=0.0, candidate_estimator__estimator__criterion=friedman_mse, candidate_estimator__estimator__init=None, candidate_estimator__estimator__learning_rate=0.1, candidate_estimator__estimator__loss=squared_error, candidate_estimator__estimator__max_depth=3, candidate_estimator__estimator__max_features=None, candidate_estimator__estimator__max_leaf_nodes=None, candidate_estimator__estimator__min_impurity_decrease=0.0, candidate_estimator__estimator__min_samples_leaf=1, candidate_estimator__estimator__min_samples_split=2, candidate_estimator__estimator__min_weight_fraction_leaf=0.0, candidate_estimator__estimator__n_estimators=100, candidate_estimator__estimator__n_iter_no_change=None, candidate_estimator__estimator__random_state=None, candidate_estimator__estimator__subsample=1.0, candidate_estimator__estimator__tol=0.0001, candidate_estimator__estimator__validation_fraction=0.1, candidate_estimator__estimator__verbose=0, candidate_estimator__estimator__warm_start=False, candidate_estimator__n_jobs=-1, candidate_estimator__param_grid={'learning_rate': [0.01, 0.1, 0.25], 'max_depth': [3, 6, 9]}, candidate_estimator__pre_dispatch=2*n_jobs, candidate_estimator__refit=True, candidate_estimator__return_train_score=False, candidate_estimator__scoring=None, candidate_estimator__verbose=2; total time= 0.6s

Fitting 5 folds for each of 9 candidates, totalling 45 fits

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=6; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END ....................learning_rate=0.25, max_depth=9; total time= 0.1s

[CV] END candidate_estimator=GridSearchCV(estimator=GradientBoostingRegressor(), n_jobs=-1,
param_grid={'learning_rate': [0.01, 0.1, 0.25],
'max_depth': [3, 6, 9]},
verbose=2), candidate_estimator__cv=None, candidate_estimator__error_score=nan, candidate_estimator__estimator=GradientBoostingRegressor(), candidate_estimator__estimator__alpha=0.9, candidate_estimator__estimator__ccp_alpha=0.0, candidate_estimator__estimator__criterion=friedman_mse, candidate_estimator__estimator__init=None, candidate_estimator__estimator__learning_rate=0.1, candidate_estimator__estimator__loss=squared_error, candidate_estimator__estimator__max_depth=3, candidate_estimator__estimator__max_features=None, candidate_estimator__estimator__max_leaf_nodes=None, candidate_estimator__estimator__min_impurity_decrease=0.0, candidate_estimator__estimator__min_samples_leaf=1, candidate_estimator__estimator__min_samples_split=2, candidate_estimator__estimator__min_weight_fraction_leaf=0.0, candidate_estimator__estimator__n_estimators=100, candidate_estimator__estimator__n_iter_no_change=None, candidate_estimator__estimator__random_state=None, candidate_estimator__estimator__subsample=1.0, candidate_estimator__estimator__tol=0.0001, candidate_estimator__estimator__validation_fraction=0.1, candidate_estimator__estimator__verbose=0, candidate_estimator__estimator__warm_start=False, candidate_estimator__n_jobs=-1, candidate_estimator__param_grid={'learning_rate': [0.01, 0.1, 0.25], 'max_depth': [3, 6, 9]}, candidate_estimator__pre_dispatch=2*n_jobs, candidate_estimator__refit=True, candidate_estimator__return_train_score=False, candidate_estimator__scoring=None, candidate_estimator__verbose=2; total time= 0.5s

Fitting 5 folds for each of 9 candidates, totalling 45 fits

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=6; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END .....................learning_rate=0.1, max_depth=3; total time= 0.1s

[CV] END ....................learning_rate=0.01, max_depth=9; total time= 0.2s

[CV] END .....................learning_rate=0.1, max_depth=6; total time= 0.2s